Big Willy Faking Influence?

Will Smith was touring the UK on his Based on a True Story tour, and ahead of one of the concerts he dropped a video titled “My favorite part of tour is seeing you all up close. Thank you for seeing me too.”

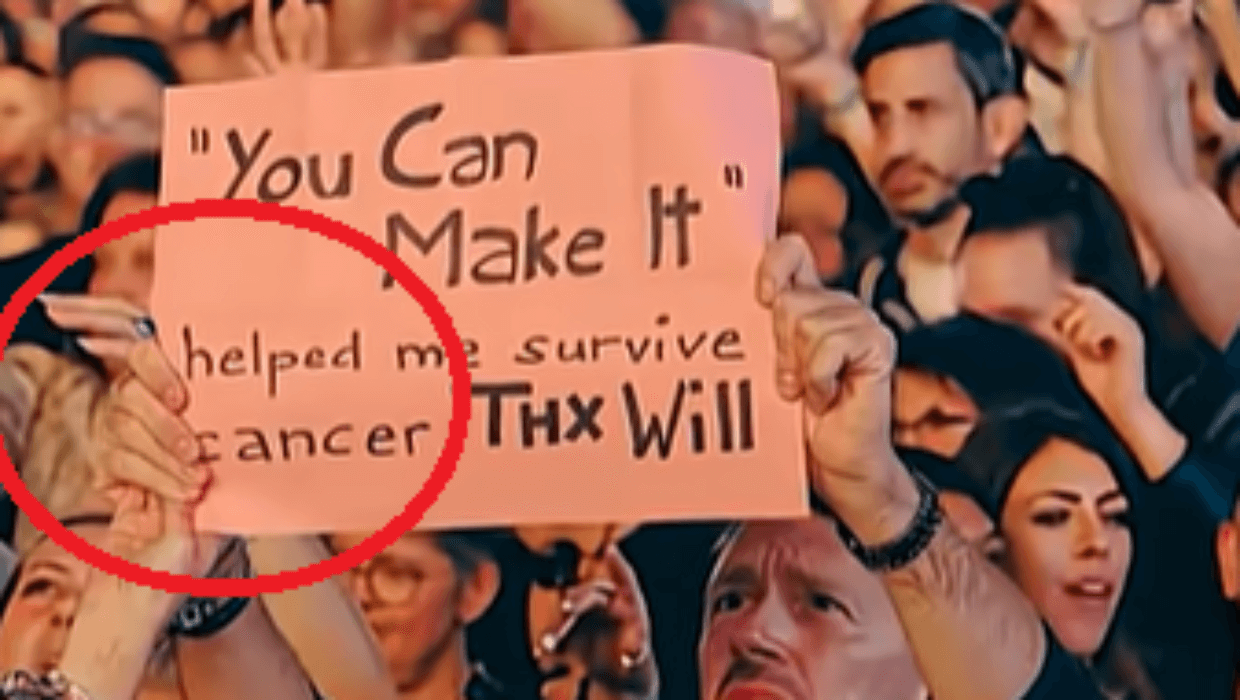

But people immediately cried foul. They said much of the crowd footage looked heavily AI-generated: weird distortions, oddly blurred faces, distorted hands (some with six fingers), signs that didn’t quite align, and crowd motion that felt off, as if AI were pulling the strings.

Then, when the accusations exploded on social media, Will didn’t apologize or deny anything. He leaned in. He posted a new video on Instagram showing him onstage; the camera pans to the crowd, and those crowd members? All have computer-generated cat heads. Because if you’re gonna fight claims of fakeness, might as well troll, right?

When AI lets you fake “reality” so easily, the temptation is enormous. Are we about to see employees forging doctor’s notes using AI medical scans or texts? Sick receipts that look legit? People lying about being at events, or about hanging with people they never met, to boost their social status?

Or businesses inflating customer numbers, user engagement, or “crowd sizes” to attract investors or advertisers? Kids may say “I was with my friends in City A” when in fact they were at a concert in City B, then produce AI-generated “evidence” (photos, group pics) to back it up. Does this become the new norm? I don’t know if everyone will do it, but we’ve already had a wave of AI-generated deepfakes, faked voices, and synthetic media. This isn’t a one-time glitch. It’s part of a trend.

If you aren’t involved in music or celebrity culture, you may think, “Meh, not my problem.” But that’s where you’re wrong (or at least where you might be missing something).

Once trust erodes, everything gets costlier: verification, audits, and constant skepticism all add up. If customers, investors, and employees can’t trust what they see, you’ll spend more to prove your legitimacy.

A startup founder bragging about “100,000 active users” might have the tools to fake the numbers; investors might pour in money and get burned. A department lead might hesitate to believe internal reports. A CEO faces reputational risk if someone shows a “crowd shot” of the company’s event that turns out to be fake. The ripple effects are huge: brand trust, legal liability, ethics, and regulatory scrutiny.

Have you ever suspected that something around you was AI-faked? Have you ever been part of something where folks inflated numbers or faked presence? I want to hear your experience.

How do we prevent people from faking their way into business or life? More importantly, how do we hold them accountable?

Share a thought leadership article on this and you might win our last pair of the Meta Ray-Ban AI-Powered Display Glasses & Neural Band.

If you don’t win those, you’ll still be eligible for a $250 Amazon gift card if we publish your article.

Best,

Matt Masinga

*Disclaimer: The content in this newsletter is for informational purposes only. We do not provide medical, legal, investment, or professional advice. While we do our best to ensure accuracy, some details may evolve over time or be based on third-party sources. Always do your own research and consult professionals before making decisions based on what you read here.