What’s Undermining America’s AI Arsenal?

The article below was written by one of our readers and approved for publication.

The writer will receive $500.

It argues that America’s AI progress is being held back by dirty, fragmented data that today’s systems can’t read or trust.

It also calls for a foundational shift toward clean, interoperable, AI-ready data as the true key to trustworthy and competitive AI.

Every Era Has Its Inflection Point. This One Is Ours.

Three years after ChatGPT’s debut, AI still dominates headlines. But beneath the buzz lies a sobering truth: America’s infrastructure was never built for trustworthy AI. The systems meant to power our digital dominance are built on data AI can’t read, trust, or learn from.

This isn’t a technical nuisance. It’s a strategic, national security vulnerability that no one wants to talk about. Without clean, AI-ready data, even the most advanced systems, and the enterprises and users behind them, can’t reach their full potential.

Chasing better models, faster chips, and bigger compute while hoping for a different result is an insanity loop. It’s time to end it and address the faulty assumptions behind AI.

Before doing so, let’s pause to think out loud together. I invite everyone shaping the AI landscape (experts, policymakers, and builders alike) to rethink how we build, invest, and lead in an AI-driven world by answering the following questions:

- What’s my organization really doing about this?

- Are we feeding our models clean, contextual inputs or just hoping for magic?

- Why aren’t we talking more about the quality of our data instead of just chasing bigger models?

Sound crazy? It’s not. When nearly all mainframe data (COBOL, VSAM) and 85% of cloud data (unstructured PDFs, emails) are trapped in systems that AI can’t access, read, or learn from, the scale of the problem is undeniable.

Whether an organization is split across two disconnected data worlds or runs in a cloud-only environment, interoperable, AI-ready data isn’t just a need; it’s an urgent requirement.

In March 2024, I read an article exposing the technical debt problem. Around the same time, I watched the LLM arms race unfold: billions raised, compute scaled, infrastructure stretched. And I thought: this doesn’t make sense.

The Wall Street Journal called this “The Invisible $1.52 Trillion Problem.” So, I asked: What if the industry’s foundational premise is wrong about data, AI, and compute itself?

Silicon Valley has taught us that when the foundation is flawed, what comes next doesn’t refine the system. It rewrites it.

We’ve seen this play out before.

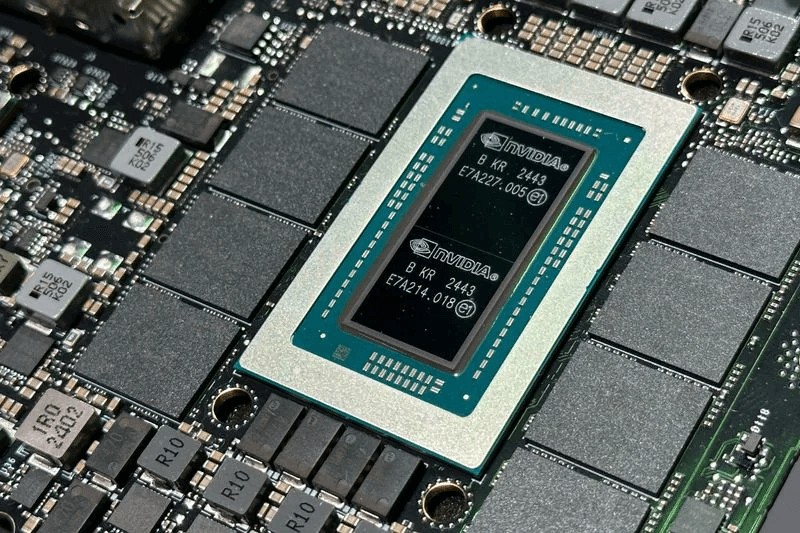

In 2012, a University of Toronto team, graduate students Alex Krizhevsky and Ilya Sutskever working with their advisor Geoffrey Hinton, forever changed the trajectory of AI by rethinking what was possible. Their neural network, AlexNet, won the ImageNet competition by a wide margin, as much because of how it was trained as because of what it did.

Until then, the prevailing assumption was that such models would be trained on CPUs. AlexNet broke that orthodoxy and exposed the limits of serial computing for this kind of workload. By turning to GPUs, originally designed for video games, the team tapped parallel processing and demonstrated at scale that GPUs could radically accelerate the training of deep convolutional neural networks.

That shift redefined compute and training by accelerating processing and expanding capacity. Today, we face a similar conundrum. The solution is better inputs: clean, AI-ready data.

Those who move first, whether LLM leaders like OpenAI and Microsoft or enterprises and governments determined to win the AI race, will reshape the playing field. The choice is simple: inherit yesterday’s limitations or rewrite the foundation for tomorrow. Clean, interoperable, AI-ready data is the true foundation for trustworthy artificial intelligence; until data is clean enough for AI, it is just data.

A 2024 Databricks study found that although two-thirds of C-suite leaders wanted to integrate GenAI with proprietary data, only 22% felt their architecture could support it. The rest operate on what one respondent called “Victorian-era plumbing.” Across government and industry, teams are buried in data prep, starving AI models of clean inputs. And when LLMs train on noisy or incompatible data, they underperform, hallucinate, misfire, or fail outright.

LLMs are language-first, not data-first, models. Without clean, contextual inputs, they mislead, fall short on compliance, and become expensive guesswork engines that drain compute, resources, and trust. Across both government and enterprise, the cost of fragmented infrastructure is an operational and economic albatross.

Even the boldest plans will falter if this foundational layer is skipped. No longer a backend concern, clean data is the front door to innovation, efficiency, and deterrence.

In the 1940s, America won the nuclear race by building something new. Visionaries like Vannevar Bush and John von Neumann accelerated wartime science and architected the postwar future. Their legacy wasn’t optimization of old infrastructure but reinvention and innovation.

In 1971, Intel’s Ted Hoff unlocked a revolution with the 4004 microprocessor. Today, clean, AI-ready data demands the same kind of leap forward and systemic redesign. And unlike 1971, the infrastructure we need is available now.

The “clean data dividend” alone could unlock over $80 billion in annual federal savings, while solving the data problem facing corporations and individuals alike. Not in five years. Not “with additional research.” Right now.

AI dominance requires economic, strategic, and technological supremacy. At its core is agentic AI: systems that reason, plan, and act. But those capabilities are meaningless without clean data, and clean alone isn’t enough. Data must also be trustworthy, scalable, and interoperable. That’s what unlocks transformation and confidence.

This is the problem that needs solving. No more disconnected systems or wasted compute. We must modernize legacy formats, flat files, and siloed systems into AI-ready infrastructure.

And the urgency is real. Today, 71% of Fortune 500 companies and 90% of top banks, insurers, airlines, and retailers remain trapped in legacy debt.

That’s why victory in the AI arms race won’t come from compute alone. It will be won by those with the clearest inputs, the strongest operational backbone, and the courage to act before outcomes are lost.

Because clarity unlocks transformation, and clarity begins with data you can trust.

From government mainframes to Fortune 500 cloud sprawl, clean, AI-ready data is the critical difference between trustworthy AI that works and the crowd making headlines today.

If you want to improve your workday and corporate performance, demand clean data.

The next moonshot won’t launch from Cape Canaveral. It will launch from clean, AI-ready data.

Author Bio

David Carmell is the Founder & CEO of DATAROCKiT, the breakthrough platform that unleashes clean, trustworthy, AI-ready data in hours, not years.

I’d love your reaction, or better yet, your own article on this same topic.

Send me a short outline first. If it’s approved, you’ll write it, we’ll publish it, and you’ll get $500 within 48 hours of publication.

Add an original 30-second video summarizing your piece and an original image, and your payment jumps to $750.

Email your outline to matt@credtrus.ai
